This post is written by Alexander, hacker extraordinaire, who rewrote the Spy backend from scratch. Next time you’re in Portland, kindly buy him a beer.

Clicky is almost 6 years old, which makes it one of the first real-time web analytics platforms. Spy is an important part of that offering. Spy lets you glimpse important information about active visitors on your website, as that information flows out of your visitors’ browsers and into our system.

Spy has been a part of Clicky since the very beginning and has always been one of the most popular features. The name and functionality were both heavily influenced by “Digg Spy”. Sean, our lead developer, says that when he saw Digg Spy circa 2005, he thought to himself “how sweet would it be if you had that kind of real time data stream, but for your own web site?” That was in fact one of his motivations to create Clicky in the first place.

Since 2006, we’ve grown by leaps and bounds, and many parts of our service have had to absorb the shocks of scale. Recent tweets about our major data center move and infrastructure changes confirm this, but much more is afoot beneath the surface than meets the eye.

Spy has gone through multiple complete rewrites to get to where it is today. We thought some of you might find that history interesting.

Initial implementation

The first version of Spy was drafted in a few days. Back then, all infrastructure components for Clicky were located on a single machine (yikes).

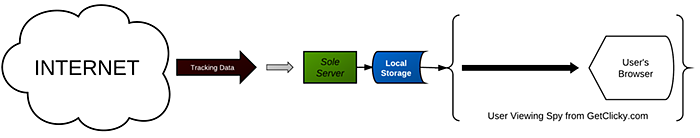

Incoming tracking data was simply copied to local storage in a special format, and retrieved anew when users viewed their Spy page. It was extremely simple, as most things are at small scale, but it worked.

Spy, evolved

By mid-2008, we had moved to a multi-server model: one web server, one tracking server, and multiple database servers. But the one tracking server was no longer cutting it. We needed to load balance the tracking, which meant Spy needed to change to support that.

When load balancing was completed, any incoming tracking hit was sent to a random tracking server. This meant that each dedicated tracking server came to store a subset of Spy data. Spy, in turn, had to open multiple sockets per request to extract and aggregate viewing data. Given X tracking servers, Spy had to open X sockets, and perform X^2 joins and sorts before emitting the usage data to the user’s web browser.
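To make the cost of that read path concrete, here is a minimal Python sketch of the aggregation step. It is an illustration only, not the original PHP: the `(timestamp, path)` hit format, the `aggregate_spy_data` name, and the `limit` parameter are all assumptions, and plain lists stand in for the per-server socket reads.

```python
def aggregate_spy_data(per_server_hits, limit=50):
    """Combine every tracking server's subset into one newest-first list.

    per_server_hits: one list of (timestamp, path) tuples per tracking
    server (a hypothetical format). In the old design, fetching each
    list cost one socket round trip.
    """
    combined = []
    for hits in per_server_hits:
        combined.extend(hits)
    # Re-sort the whole combined set on every request, newest first.
    combined.sort(key=lambda hit: hit[0], reverse=True)
    return combined[:limit]


# Three tracking servers, each holding a random subset of one site's hits.
server_a = [(100, "/home"), (105, "/pricing")]
server_b = [(101, "/blog")]
server_c = [(103, "/signup"), (104, "/home")]

latest = aggregate_spy_data([server_a, server_b, server_c], limit=3)
```

Every request repeats this work over the full data set for the site, so the cost grows with both the number of tracking servers and the amount of buffered data.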

Initially the Spy data files were served via Apache from all of the tracking servers, but that tied up resources that were needed for tracking. Soon after this setup, our first employee (no longer with us; hi Andy!) wrote a tiny Python script that ran as a daemon on each tracking server. It took over serving the Spy files to the PHP process on the web server that was requesting them. This helped free up precious HTTP processes for tracking, but there were still a lot of resources being wasted because of the storage method we were using.

Tracking servers had no mechanism for granularly controlling the memory consumed by their Spy data. Therefore, each tracking server had a cron job that would indiscriminately trim its Spy data. Sometimes, however, the data would grow out of control anyway, so trims also happened randomly when users viewed their Spy page. These competing mechanisms and their implementations occasionally gave rise to data linearity issues.

The number of websites we tracked continued rising, and we made several changes to this implementation to keep resource usage in check. Among them was the move to writing Spy data to shared memory (/dev/shm) instead of the hard drive. This helped a great deal initially, but as time wore on, it became clear that this was just not going to scale much further without a complete rethinking of Spy.

Trimming the fat

In the end, we decided to reimplement Spy’s backend from the ground up. We devised a list of gripes that we had with Spy, and it looked like this:

* N tracking servers meant N sockets opened/closed on every request for data

* Data had to be further aggregated and then sorted on every request

* Full list of Spy data for a site was transmitted on every request, which consumed massive bandwidth (over 5MB/sec per tracking server on internal network) and required lots of post-processing by PHP

Our goals looked like this:

* Improve throughput

* Improve efficiency

* Reduce network traffic

* Reduce per-request and overall resource usage

Enter Spyy

The modern version of Spy, called “Spyy” to fit in with our internal naming conventions, is implemented in two custom daemons written in C. The first runs on each tracking server and dumbly forwards incoming Spy data to the second daemon, the Spy master. Data travels over a persistent socket established by ZeroMQ.

The Spy master daemon stores the information efficiently for each site, using a circular buffer whose size is determined by, and periodically reevaluated against, that site’s traffic rate. This removes the need to manually trim any data from a site as we’ve been doing for years. Once the buffer is filled for a site, old data is automatically purged as new data arrives. When we read data from the new daemon, it gives us only the data we need (basically, everything since a given timestamp) instead of all data for the site, drastically reducing network bandwidth and the post-processing PHP has to do.
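The idea can be sketched in a few lines of Python. This is not the C daemon's code: the `SiteBuffer` class, the `capacity_for` sizing rule, and every number in it are hypothetical, and the sketch assumes hits arrive in timestamp order.

```python
from collections import deque


def capacity_for(hits_per_minute, window_minutes=10, floor=100, ceiling=50_000):
    """Pick a buffer size from a site's traffic rate (all numbers hypothetical)."""
    return max(floor, min(ceiling, int(hits_per_minute * window_minutes)))


class SiteBuffer:
    """Fixed-capacity ring of (timestamp, payload) hits for one site."""

    def __init__(self, capacity):
        self._hits = deque(maxlen=capacity)

    def __len__(self):
        return len(self._hits)

    def append(self, timestamp, payload):
        # Once the deque is full, appending evicts the oldest hit.
        self._hits.append((timestamp, payload))

    def since(self, timestamp):
        """Return only hits strictly newer than `timestamp`, oldest first."""
        newer = []
        for hit in reversed(self._hits):  # walk newest to oldest
            if hit[0] <= timestamp:
                break  # everything further back is older still
            newer.append(hit)
        newer.reverse()
        return newer

    def resize(self, capacity):
        """Rebuild the ring at a new capacity, keeping the newest hits."""
        self._hits = deque(self._hits, maxlen=capacity)


buf = SiteBuffer(capacity=3)
for ts, path in [(1, "/a"), (2, "/b"), (3, "/c"), (4, "/d"), (5, "/e")]:
    buf.append(ts, path)

recent = buf.since(3)  # only what the caller has not seen yet
```

A reader that remembers the timestamp of the last hit it received can pass it to `since` on the next poll and get back just the new hits, which is what keeps the response small.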

Like the previous version of Spy, all data is stored in RAM to ensure peak performance. Because of this, however, the data is still volatile. That is acceptable in itself: we have other mechanisms for permanently storing tracking data, and Spy data excludes much of the information that is permanently stored. But when we implement new features or apply bugfixes to Spyy, it must be killed and started anew, and whatever it held in memory goes with it.

To mitigate this blip in the availability of data, we have implemented a rudimentary transfer mechanism between the existing Spy program and a new one coming online to take its place. This mechanism also uses ZeroMQ and, basically, drains data from the existing process to the new process. At completion, the old process shuts itself down and the new process claims the network interfaces it occupied.
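The handoff can be pictured with a small in-process Python sketch. The real mechanism moves data between two processes over ZeroMQ; here two dictionaries of per-site deques stand in for the old and new processes, and the `drain` name and the data layout are assumptions made for illustration.

```python
from collections import deque


def drain(old_store, new_store):
    """Move every buffered hit from old_store into new_store, oldest first.

    Both stores map a site id to a deque of hits (a hypothetical layout).
    Assumes new_store is not yet receiving live traffic, which matches the
    new process taking over the network interfaces only after the drain.
    Returns the number of hits moved.
    """
    moved = 0
    for site_id in list(old_store):
        source = old_store.pop(site_id)
        target = new_store.setdefault(site_id, deque(maxlen=source.maxlen))
        while source:
            target.append(source.popleft())
            moved += 1
    return moved


old = {
    "site-1": deque([(1, "/a"), (2, "/b")], maxlen=4),
    "site-2": deque([(3, "/c")], maxlen=4),
}
new = {}
moved = drain(old, new)
```

After the call the old store is empty and can shut down, while the new store holds the same hits in the same order and with the same per-site capacity.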

Conspycuous benefits

We met our goals, and then some. The overall project took about 8 weeks.

Benchmarks at the start of the project showed that the old Spy implementation was barely able to keep up at peak load, tapping out at about 5,000 reqs/sec. In comparison, the new implementation can handle upwards of 50,000 reqs/sec on the same hardware.

In the old architecture, read performance decreased as the number of tracking servers increased. In the new architecture, read performance is unaffected by the number of tracking servers, and is roughly 2N times better than the old architecture, where N is the number of tracking servers (at least 1). Write performance in both cases is constant.

RAM usage for storing Spy data was approximately 4GB per tracking server under the old architecture. At the time we had 5 tracking servers which meant 20GB total (we have 7 tracking servers now). New Spy, on the other hand, only uses up 6GB total RAM on a single virtual machine, and it takes up so little CPU power that the same server hardware also hosts one tracking server and four database servers without issue.

Bandwidth-wise, the old Spy used over 20MB/sec of combined bandwidth for read requests from the tracking servers. New Spy? About 500KB/sec on average, reducing network footprint to under 3% of what it was before.

In the event of a Spy master server outage, our tracking servers simply drop Spyy-bound packets automatically and continue persisting other data to disk, with absolutely no performance impact.
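The principle is "drop rather than block". The following is only a plain Python analogy using a bounded queue, not the daemon's actual code and not a description of ZeroMQ internals; the `SpyForwarder` class and its `max_pending` limit are hypothetical.

```python
import queue


class SpyForwarder:
    """Queue Spy-bound hits without ever blocking the tracking path."""

    def __init__(self, max_pending=1000):
        self._pending = queue.Queue(maxsize=max_pending)
        self.dropped = 0

    def forward(self, hit):
        """Try to queue a hit; drop it and return False if the queue is full."""
        try:
            self._pending.put_nowait(hit)
            return True
        except queue.Full:
            self.dropped += 1
            return False


# With the master unreachable nothing drains the queue, so it fills up
# and further hits are discarded immediately instead of stalling tracking.
forwarder = SpyForwarder(max_pending=2)
results = [forwarder.forward(n) for n in range(5)]
```

Because `forward` returns immediately either way, the code that persists tracking data to disk never waits on Spy.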

Because of the way that ZeroMQ is implemented, we can scale this architecture very readily and rapidly. It removed a lot of application complexity and let us focus on the implementation. With ZeroMQ, business logic itself drives the network topology.

Additionally, because of ZeroMQ, we can easily segment sites out onto different Spy “masters” with little change to the rest of Clicky, should the need or desire arise. In fact, we already do this because of some legacy white label customers (which we, of course, thank for the challenge provided by their existence in this implementation).

As stated above, redundancy is not a goal of ours for Spy, because of the volatile and transient nature of its data. But if we ever change our mind, we can simply set up a ZeroMQ device between the tracking servers and Spy master, and have each datum be split off to N master servers.

Overall, we are extremely happy with the improvements made. We are also very impressed with ZeroMQ. It has been getting quite a bit of hype recently, and in our opinion it lives up to it.